Set Up Local LLMs

Download and install Ollama from here

Run Ollama:

Download large language models by running the ollama pull command in a terminal, for example:

ollama pull llama3.1

Run the model

ollama run llama3.1

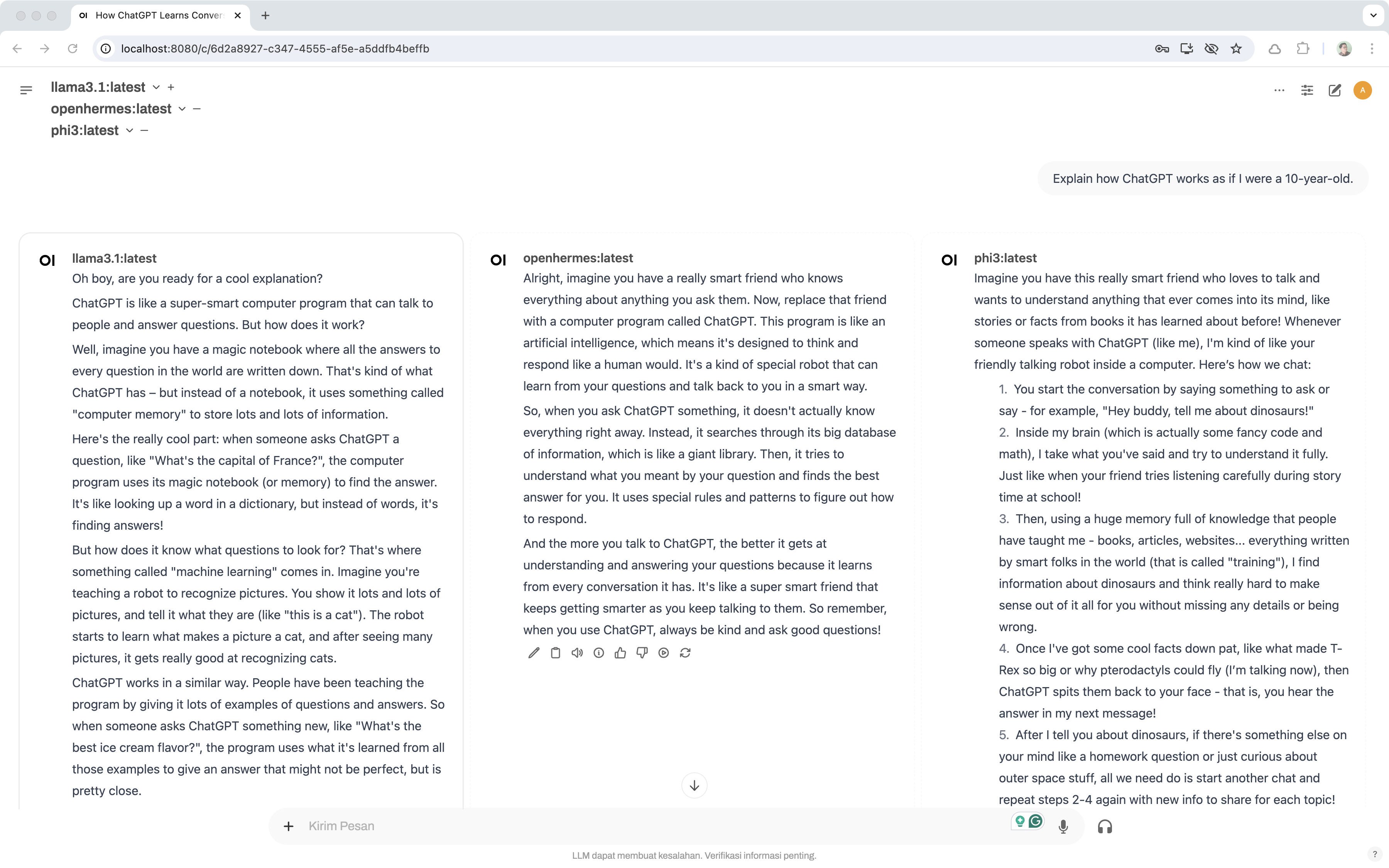
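The pull and run steps above can also be scripted. The following is a minimal sketch, not an official workflow: `pull_and_ask` is a hypothetical helper name introduced here for illustration, and it assumes the `ollama` CLI is already installed and on your PATH. It passes the prompt as an argument to `ollama run`, so the model answers once and exits instead of opening an interactive session.

```shell
#!/usr/bin/env sh
# Sketch: pull a model, then send it a single prompt non-interactively.
# pull_and_ask is a hypothetical helper name, not part of Ollama.
pull_and_ask() {
    model="$1"    # model name, e.g. llama3.1
    prompt="$2"   # text to send to the model

    # Stop early if the ollama CLI is not available.
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama not found; install it first" >&2
        return 1
    fi

    ollama pull "$model" || return 1   # download the model
    ollama list                        # show locally available models
    ollama run "$model" "$prompt"      # answer one prompt, then exit
}

# Example call (uncomment to run):
# pull_and_ask llama3.1 "Explain what a local LLM is in one sentence."
```

Running `ollama run llama3.1` without a prompt argument, as in the step above, starts the interactive chat instead.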

Optional: Use Open WebUI

Install Open WebUI from here

Run Open WebUI according to the installation method you chose above

Open the browser and navigate to

http://localhost:8080
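If the page does not load, it can help to check from a terminal whether anything is answering at that address. The sketch below assumes `curl` is installed; `check_webui` is a hypothetical helper name, and the default URL is simply the one given above. If your installation method exposes a different port, pass that URL as the first argument.

```shell
#!/usr/bin/env sh
# Sketch: check whether a local web UI answers at a given URL.
# check_webui is a hypothetical helper name; the default URL comes from the steps above.
check_webui() {
    url="${1:-http://localhost:8080}"

    # -s: silent, -f: treat HTTP errors as failure,
    # -o /dev/null: discard the body, --max-time: give up after 5 seconds
    if curl -sf -o /dev/null --max-time 5 "$url"; then
        echo "Open WebUI is reachable at $url"
    else
        echo "No response from $url; check that Open WebUI is running" >&2
        return 1
    fi
}

# Example call (uncomment to run):
# check_webui
```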