Linear Regression with SKLearn


```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Seed NumPy's global RNG so the generated data and the train/test split are reproducible
np.random.seed(123)
```

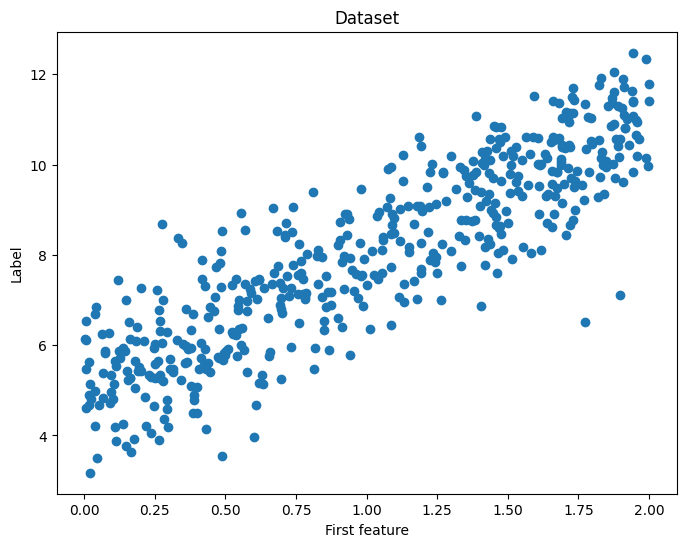

Dataset

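```python
# Generate a synthetic dataset: 500 samples with one feature in [0, 2)
# and a label that follows y = 5 + 3x plus standard Gaussian noise
X = 2 * np.random.rand(500, 1)
y = 5 + 3 * X + np.random.randn(500, 1)

# Plot the raw data
fig = plt.figure(figsize=(8, 6))
plt.scatter(X, y)
plt.title("Dataset")
plt.xlabel("First feature")
plt.ylabel("Label")
plt.show()
```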
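Reading the cell above as a formula may help: `np.random.rand` draws uniformly from [0, 1) and `np.random.randn` draws standard normal noise, so each sample follows

$$
y = 5 + 3x + \varepsilon, \qquad x \sim \mathcal{U}[0, 2), \qquad \varepsilon \sim \mathcal{N}(0, 1).
$$

A well-fitted model should therefore recover an intercept close to 5 and a slope close to 3. Because the noise has variance 1, even the true line would have an expected squared error of about 1 on new samples, which gives a rough yardstick for the test error.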

```python
# Split the data into a training and a test set
# (the default test_size of 0.25 gives 375 training and 125 test samples)
X_train, X_test, y_train, y_test = train_test_split(X, y)

print(f"Shape X_train: {X_train.shape}")
print(f"Shape y_train: {y_train.shape}")
print(f"Shape X_test: {X_test.shape}")
print(f"Shape y_test: {y_test.shape}")
```

```
Shape X_train: (375, 1)
Shape y_train: (375, 1)
Shape X_test: (125, 1)
Shape y_test: (125, 1)
```
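The split above relies on defaults: with neither `test_size` nor `train_size` given, `train_test_split` holds out 25% of the samples, and with `random_state=None` it shuffles using NumPy's global random state, which `np.random.seed(123)` has already seeded. A minimal sketch that spells both out, assuming a local `np.random.RandomState(123)` generator and an arbitrary `random_state=42` (both are illustrative choices, so the rows that land in each set will differ from the notebook's split):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Same recipe as the notebook: 500 samples, one feature, y = 5 + 3x + noise
rng = np.random.RandomState(123)
X = 2 * rng.rand(500, 1)
y = 5 + 3 * X + rng.randn(500, 1)

# Explicit test_size and random_state make the split self-documenting and
# reproducible without relying on the global NumPy seed
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# The test set gets ceil(0.25 * 500) = 125 rows; the remaining 375 go to training
print(X_train.shape, X_test.shape)
```

The shapes match the notebook's output; only the assignment of individual samples changes with the seed.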

Training

```python
# Fit an ordinary least-squares linear model on the training set
regr = LinearRegression()
regr.fit(X_train, y_train)
```

```
LinearRegression()
```
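After fitting, the learned parameters are stored in `regr.intercept_` and `regr.coef_`. As a sanity check, the sketch below compares them with an ordinary least-squares solve via `np.linalg.lstsq`, the same objective that `LinearRegression` minimizes. It regenerates the data with the notebook's recipe and, for brevity, fits on all 500 samples rather than on `X_train`, so the numbers will differ slightly from the notebook's model:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Regenerate data with the notebook's recipe: y = 5 + 3x + Gaussian noise
rng = np.random.RandomState(123)
X = 2 * rng.rand(500, 1)
y = 5 + 3 * X + rng.randn(500, 1)

# Fit on all 500 samples (the notebook fits on X_train only)
regr = LinearRegression().fit(X, y)

# y is a 2-D column, so intercept_ has shape (1,) and coef_ has shape (1, 1)
print("intercept_:", regr.intercept_)
print("coef_:", regr.coef_)

# Ordinary least squares by hand: prepend a column of ones for the intercept
X_b = np.hstack([np.ones_like(X), X])
theta, *_ = np.linalg.lstsq(X_b, y, rcond=None)
print("lstsq [intercept, slope]:", theta.ravel())
```

Both routes should agree to numerical precision and land near the true intercept 5 and slope 3.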

Testing

```python
# Predict labels for the held-out test set and show the first 10
y_pred = regr.predict(X_test)
y_pred[:10]
```

```
array([[10.23509009],
       [ 7.96013802],
       [ 8.24265637],
       [ 5.71975515],
       [ 4.91785424],
       [ 5.64173755],
       [10.77645888],
       [ 6.28942254],
       [10.91860508],
       [ 6.24259349]])
```
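For a linear model, `predict` simply evaluates the fitted line, `X @ coef_.T + intercept_`. The sketch below checks this on three hypothetical query points, refitting on freshly generated data of the same form (so the values are not the notebook's):

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Regenerate data with the notebook's recipe and fit a model
rng = np.random.RandomState(123)
X = 2 * rng.rand(500, 1)
y = 5 + 3 * X + rng.randn(500, 1)
regr = LinearRegression().fit(X, y)

# Hypothetical query points spanning the feature range
X_new = np.array([[0.0], [1.0], [2.0]])

# Evaluate the fitted line directly: X @ coef_.T + intercept_
manual = X_new @ regr.coef_.T + regr.intercept_

print(regr.predict(X_new))
print(manual)
```

At x = 0 the prediction reduces to the intercept, which makes the first row an easy check.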

```python
# Ground-truth labels for the same 10 test samples
y_test[:10]
```

```
array([[11.43392748],
       [ 7.33861245],
       [ 8.9137441 ],
       [ 8.67468833],
       [ 5.63189611],
       [ 6.01422288],
       [11.71427334],
       [ 6.74555903],
       [10.18985201],
       [ 6.83689782]])
```

```python
# The mean squared error between true and predicted test labels
print("Mean squared error: %.2f" % mean_squared_error(y_test, y_pred))
```

```
Mean squared error: 0.87
```
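The notebook imports `r2_score` but never calls it. A sketch computing both metrics with scikit-learn and by hand, on data regenerated with the same recipe and an arbitrary `random_state=0` split (so the values will not match the MSE above exactly):

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Regenerate data with the notebook's recipe and split it
rng = np.random.RandomState(123)
X = 2 * rng.rand(500, 1)
y = 5 + 3 * X + rng.randn(500, 1)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

y_pred = LinearRegression().fit(X_train, y_train).predict(X_test)

# MSE = (1/n) * sum((y_i - y_hat_i)^2)
mse_manual = np.mean((y_test - y_pred) ** 2)

# R^2 = 1 - SS_res / SS_tot, where SS_tot is measured around the mean of y_test
ss_res = np.sum((y_test - y_pred) ** 2)
ss_tot = np.sum((y_test - y_test.mean()) ** 2)
r2_manual = 1 - ss_res / ss_tot

print(f"MSE: {mean_squared_error(y_test, y_pred):.2f} (manual {mse_manual:.2f})")
print(f"R^2: {r2_score(y_test, y_pred):.2f} (manual {r2_manual:.2f})")
```

Since the label variance is roughly 9 · (1/3) + 1 = 4 and the noise variance is 1, an R² of about 0.75 is roughly the best this data allows.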

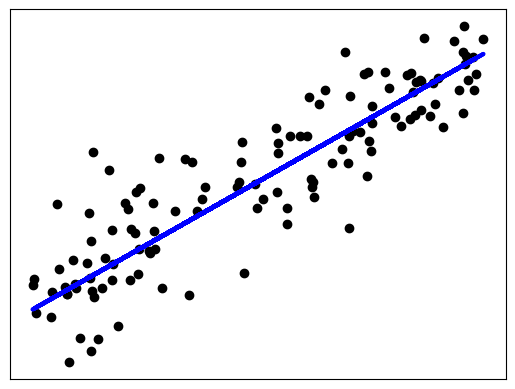

Visualize prediction output

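```python
# Test points (black) and the fitted regression line (blue).
# X_test is unsorted, but all predictions lie on one line, so the
# connected segments still draw a straight line.
plt.scatter(X_test, y_test, color="black")
plt.plot(X_test, y_pred, color="blue", linewidth=3)

# Hide the tick labels for a cleaner figure
plt.xticks(())
plt.yticks(())
plt.show()
```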
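The plot above hides the tick labels and draws the line through the unsorted test points. An alternative sketch, assuming the same data recipe, evaluates the model on an evenly spaced grid over [0, 2] and overlays the true line y = 5 + 3x from the data-generating code for comparison:

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.linear_model import LinearRegression

# Regenerate data with the notebook's recipe and fit on all samples
rng = np.random.RandomState(123)
X = 2 * rng.rand(500, 1)
y = 5 + 3 * X + rng.randn(500, 1)
regr = LinearRegression().fit(X, y)

# Evenly spaced, sorted inputs covering the feature range [0, 2]
x_grid = np.linspace(0, 2, 100).reshape(-1, 1)

fig, ax = plt.subplots(figsize=(8, 6))
ax.scatter(X, y, color="black", s=10, label="Data")
ax.plot(x_grid, regr.predict(x_grid), color="blue", linewidth=3, label="Fitted line")
ax.plot(x_grid, 5 + 3 * x_grid, color="red", linestyle="--", label="True line: y = 5 + 3x")
ax.set_xlabel("First feature")
ax.set_ylabel("Label")
ax.legend()
plt.show()
```

Keeping the axis ticks makes it possible to read the intercept off the plot, and the dashed true line shows how closely the fit recovers the generating model.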