Hello, World of Machine Learning#

1. Before you begin#

In this Jupyter Notebook, you will learn the "Hello, World" of machine learning: instead of explicitly programming rules in a language such as C++ or Java, you will build a system that is trained on data to infer the rules that describe the relationships in that data.

Consider this problem: you are building a fitness-tracking system that can recognize sports activities. You might have access to a person's movement speed and try to predict their activity from that speed using a conditional.

if speed < 4:
    status = WALKING

You can then add a condition for running:

if speed < 4:
    status = WALKING
else:
    status = RUNNING

You can also add a final condition for cycling:

if speed < 4:
    status = WALKING
elif speed < 12:
    status = RUNNING
else:
    status = CYCLING
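The three conditions above can be collected into a small runnable function. This is a minimal sketch: the function name `classify_activity`, the string labels, and the assumption that speed is measured in one consistent unit are illustrative choices, not part of the original notebook.

```python
def classify_activity(speed: float) -> str:
    """Map a movement speed to an activity using hand-written rules."""
    # Thresholds 4 and 12 come from the conditions above; the unit is assumed.
    if speed < 4:
        return "WALKING"
    elif speed < 12:
        return "RUNNING"
    else:
        return "CYCLING"


for speed in (3, 8, 20):
    print(speed, classify_activity(speed))
```

Each new activity would need another hand-tuned branch in this function.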

Now consider what happens if you want to add another activity, such as golf. It is far less obvious how to write a rule for that activity.

# Now what?

It is extremely hard to write a program that recognizes someone playing golf, so what should you do? Use machine learning!

Prerequisites#

Before trying this Jupyter Notebook, you should have:

Solid knowledge of Python

Basic programming skills

What you'll learn#

The basics of machine learning

What you'll build#

Your first machine learning model

2. What is machine learning?#

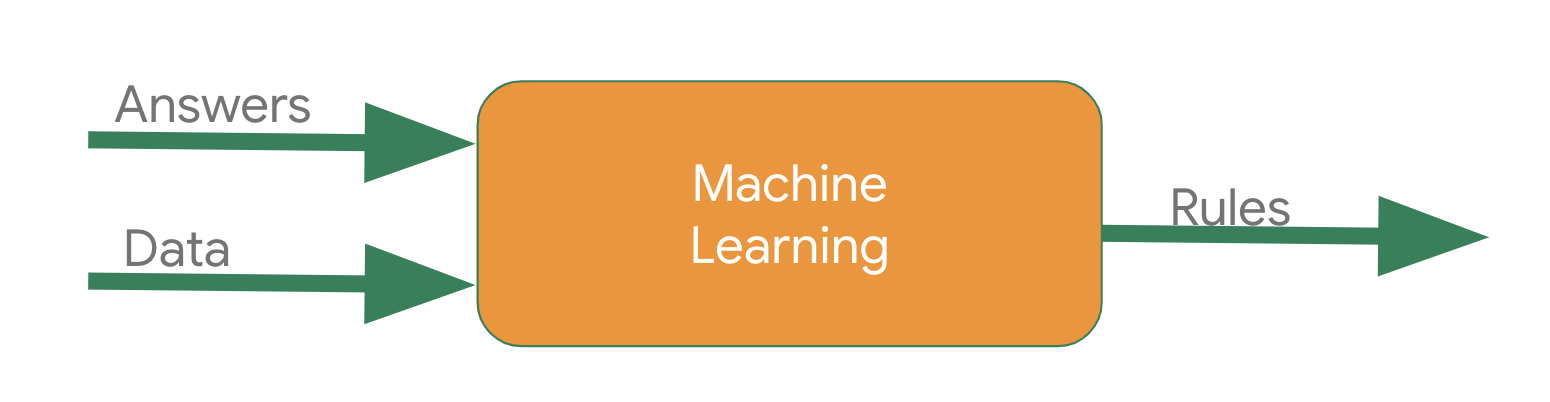

Consider the traditional way of building an application, as represented in the accompanying diagram:

You express rules in a programming language. Those rules act on data, and your program produces answers. In the activity-detection case, the rules (the code you wrote to define the activity types) act on the incoming data (the user's movement speed) to produce an answer: the return value of the function that determines the user's activity status.

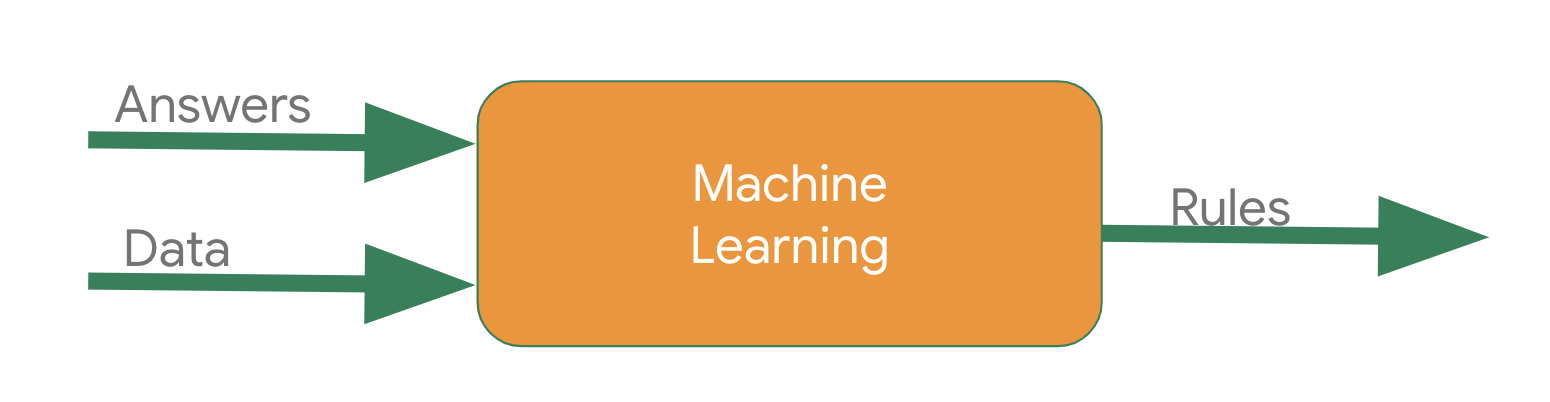

The process of detecting activity status with ML is actually quite similar; only the inputs and outputs differ:

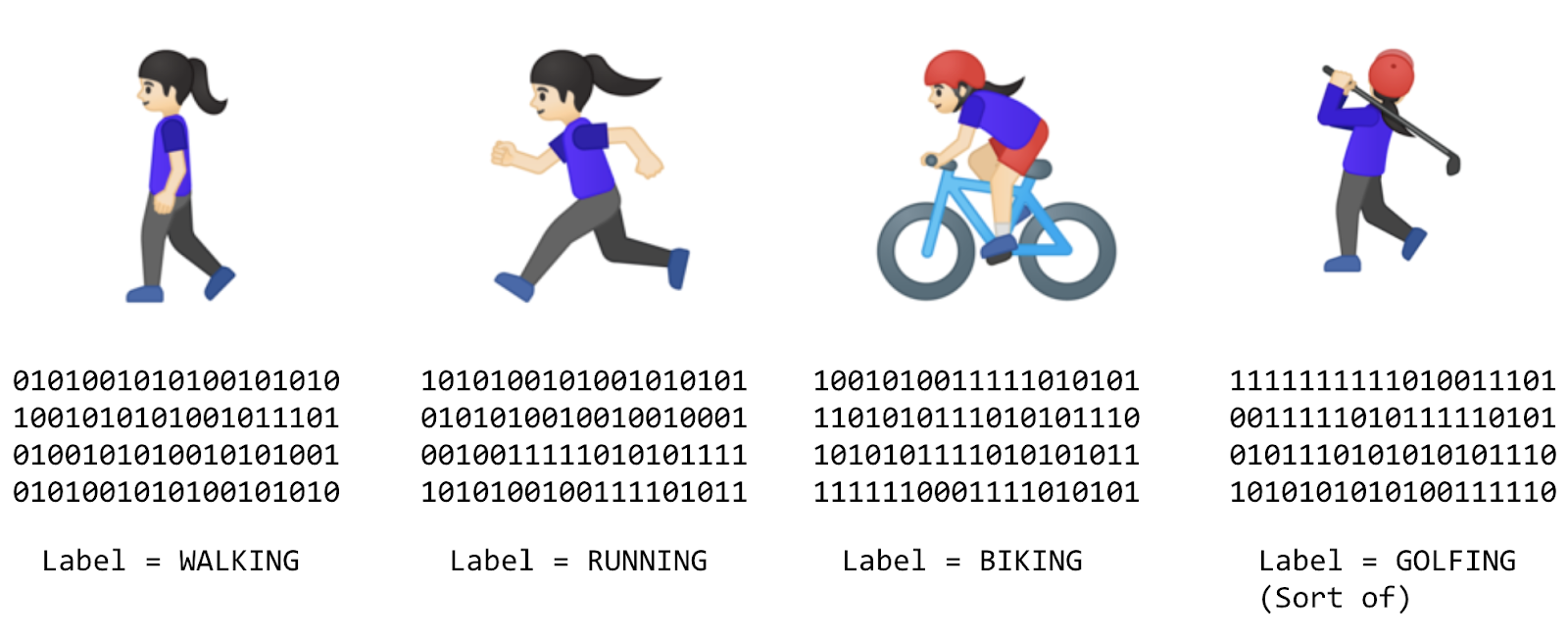

Instead of trying to define the rules and express them in a programming language, you provide the answers (usually called labels) along with the data, and the machine infers the rules that determine the relationship between the answers and the data. For example, activity detection might look like this in an ML context:

You gather a large amount of data and label it so that you can effectively say, "This is what walking looks like," or "This is what running looks like." Then, from that dataset, the computer can infer the rules that determine the patterns that characterize a particular activity.
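To make the idea of inferring a rule from labeled data concrete, here is a deliberately tiny sketch. The speed values and labels are made up for illustration, and `learn_threshold` is a hypothetical helper that simply places the boundary midway between the fastest walking sample and the slowest running sample. Real ML models learn far richer rules than a single threshold.

```python
# Made-up labeled samples: (speed, label). Values are illustrative only.
samples = [
    (2.0, "WALKING"),
    (3.0, "WALKING"),
    (3.5, "WALKING"),
    (6.0, "RUNNING"),
    (8.0, "RUNNING"),
    (10.0, "RUNNING"),
]


def learn_threshold(data):
    """Infer a walking/running boundary from labeled speeds."""
    fastest_walk = max(s for s, label in data if label == "WALKING")
    slowest_run = min(s for s, label in data if label == "RUNNING")
    # Assumes the two classes do not overlap in speed.
    return (fastest_walk + slowest_run) / 2


threshold = learn_threshold(samples)


def predict(speed):
    """Apply the learned rule to new data."""
    return "WALKING" if speed < threshold else "RUNNING"


print(threshold)     # 4.75
print(predict(4.0))  # WALKING
```

Nobody wrote the value 4.75 by hand; it came from the data and labels.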

This is more than just an alternative way of programming: it also opens up new scenarios, such as recognizing the patterns of playing golf, which would be impractical to capture with traditional rule-based programming.

In traditional programming, your code compiles into a binary that is typically called a program. In ML, the artifact you create from the data and labels is called a model.

So, if you go back to this diagram:

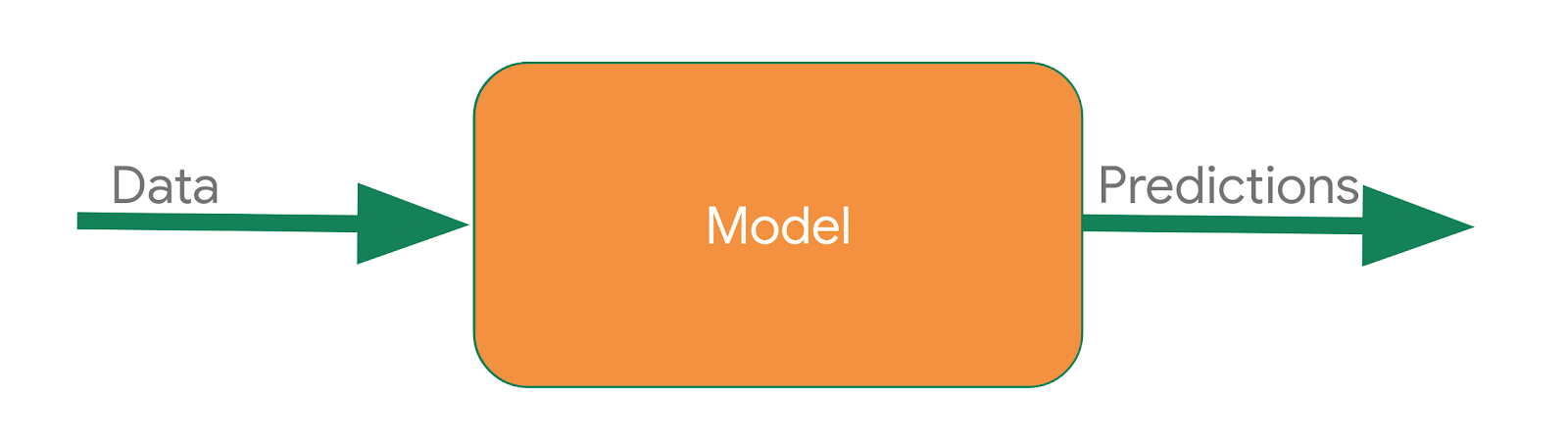

The output of the flow above is a model, which you can use as follows:

You pass the model some data, and it uses the rules it inferred during training to produce a prediction, such as "This data looks like walking" or "This data looks like cycling."

3. Create your first ML model#

Consider the following sets of numbers. Can you see the relationship between them?

| X | Y |
|---|---|
| -1 | -2 |
| 0 | 1 |
| 1 | 4 |
| 2 | 7 |
| 3 | 10 |
| 4 | 13 |

You may notice that X increases by 1 in each row while Y increases by 3. You might therefore guess that Y equals 3X plus or minus some number. Then, looking at the row where X=0 and Y=1, you would conclude that Y=3X+1.
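The reasoning above can be checked in a few lines of plain Python. This sketch derives the slope from the step between the first two rows and the intercept from the row where X=0; the variable names are illustrative.

```python
xs = [-1, 0, 1, 2, 3, 4]
ys = [-2, 1, 4, 7, 10, 13]

# Slope: how much Y changes per unit change in X between the first two rows.
slope = (ys[1] - ys[0]) / (xs[1] - xs[0])

# Intercept: the value of Y in the row where X is 0.
intercept = ys[xs.index(0)]

# Confirm that Y = slope * X + intercept holds for every row.
fits_all_rows = all(y == slope * x + intercept for x, y in zip(xs, ys))

print(slope, intercept, fits_all_rows)  # 3.0 1 True
```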

What you just did is very close to how you train an ML model to spot patterns in data!

Now, let's look at the code to do it.

How would you train a neural network to do the equivalent task? Using data! By feeding the neural network a set of X values and a set of Y values, you let it work out the relationship between them.

Import#

Start by importing the required libraries. You will use TensorFlow, aliased as tf for ease of use.

Next, import NumPy, which lets you represent the data as arrays easily and quickly.

Finally, you will use Keras, a framework for defining a neural network as a sequence of layers.

import numpy as np

import tensorflow as tf

from tensorflow import keras

Define and compile the neural network#

Next, create a simple neural network. It has only one layer, that layer has only one neuron, and the input shape is a single value.

model = tf.keras.Sequential([keras.layers.Dense(units=1, input_shape=[1])])

model.summary()

Model: "sequential_1"

┏━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━┓
┃ Layer (type)                    ┃ Output Shape           ┃       Param # ┃
┡━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━┩
│ dense_1 (Dense)                 │ (None, 1)              │             2 │
└─────────────────────────────────┴────────────────────────┴───────────────┘

Total params: 2 (8.00 B)

Trainable params: 2 (8.00 B)

Non-trainable params: 0 (0.00 B)
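The summary reports two parameters because a Dense layer with one unit and one input computes `output = weight * input + bias`. The sketch below mimics that computation in plain Python. `dense_one_unit` is an illustrative stand-in, not Keras code, and the weight and bias values are arbitrary. The 8.00 B figure is consistent with two parameters stored as 4-byte floats, assuming the default float32 dtype.

```python
def dense_one_unit(x: float, weight: float, bias: float) -> float:
    """Forward pass of a single neuron with one input and no activation."""
    return weight * x + bias


# Arbitrary illustrative parameter values; Keras initializes its own.
weight, bias = 3.0, 1.0
params = [weight, bias]

print(len(params))                        # 2 trainable parameters
print(len(params) * 4)                    # 8 bytes if each is a float32
print(dense_one_unit(2.0, weight, bias))  # 7.0
```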

Next, write the code to compile your neural network. To do so, you need to specify two functions: a loss function and an optimizer.

In this example, you already know that the relationship between the numbers above is Y=3X+1.

When the computer tries to learn that relationship, however, it starts with a guess, perhaps Y=10X+10. The loss function measures how far the values computed from the guess are from the known correct answers, and so quantifies how good or bad the guess is.

Next, the model uses the optimizer to make another guess. Based on the result of the loss function, the optimizer tries to minimize the loss. At this point, the computer might come up with something like Y=5X+5. That guess is still poor, but it is closer to the correct answer (because the loss is lower).
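To see why Y=5X+5 counts as a better guess than Y=10X+10, you can compute the mean squared error by hand. This is a plain-Python sketch of the formula (the average of the squared differences between predictions and targets), not the Keras implementation; the helper name `mse_for_guess` is illustrative.

```python
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-2.0, 1.0, 4.0, 7.0, 10.0, 13.0]


def mse_for_guess(w: float, b: float) -> float:
    """Mean squared error of the guess Y = w*X + b on the six data points."""
    squared_errors = [((w * x + b) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / len(squared_errors)


print(mse_for_guess(10, 10))  # about 523.17
print(mse_for_guess(5, 5))    # about 60.67
print(mse_for_guess(3, 1))    # 0.0
```

The loss drops as the guess approaches Y=3X+1 and reaches exactly zero at the true relationship.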

The model repeats this process for the number of epochs you specify, as you will see shortly.

First, here is how to tell the model to use mean_squared_error as the loss function and stochastic gradient descent (sgd) as the optimizer. You don't need to understand the math behind these functions yet, but you can see that they work!

Over time, you will learn about the different loss and optimizer functions that suit different scenarios.

model.compile(optimizer="sgd", loss="mean_squared_error")

Provide the data#

Next, feed in some data. In this case, you will use the six X and Y values from earlier.

Use NumPy to create the arrays:

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)

ys = np.array([-2.0, 1.0, 4.0, 7.0, 10.0, 13.0], dtype=float)

You have now written all the code needed to define the neural network! The next step is to train it so that it can infer the patterns between those numbers and use them to create a model.

4. Train the neural network#

Training the neural network, where it learns the relationship between the X and Y values, starts with a call to model.fit. That function runs a loop in which the neural network makes a guess, measures how good or bad the guess is (the loss), and then uses the optimizer to make another guess. The loop repeats for the number of epochs you specify. When you run model.fit, you will see the loss printed for each epoch.
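The loop that model.fit runs can be approximated in plain Python. The sketch below uses full-batch gradient descent on the mean squared error with a learning rate of 0.01 (the default for the Keras SGD optimizer). It starts from an assumed guess of Y=10X+10, whereas Keras starts from its own random initialization, so the loss values here will not match the training log.

```python
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-2.0, 1.0, 4.0, 7.0, 10.0, 13.0]

w, b = 10.0, 10.0       # assumed starting guess: Y = 10X + 10
learning_rate = 0.01
n = len(xs)
losses = []

for epoch in range(500):
    # 1. Make a guess with the current parameters.
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    # 2. Measure how good the guess is (mean squared error).
    losses.append(sum(e * e for e in errors) / n)
    # 3. Step the parameters against the gradient of the loss.
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
print(f"learned rule: Y = {w:.3f}X + {b:.3f}")
```

Running it should show the loss shrinking toward zero while w and b approach 3 and 1.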

model.fit(xs, ys, epochs=500)

Epoch 1/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 87ms/step - loss: 14.2421

Epoch 2/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 11.2341

Epoch 3/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.8670

Epoch 4/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 7.0041

Epoch 5/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 5.5379

Epoch 6/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.3838

Epoch 7/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.4752

Epoch 8/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.7599

Epoch 9/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.1966

Epoch 10/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.7528

Epoch 11/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.4032

Epoch 12/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.1277

Epoch 13/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.9104

Epoch 14/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.7390

Epoch 15/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.6037

Epoch 16/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.4967

Epoch 17/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.4122

Epoch 18/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.3452

Epoch 19/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 29ms/step - loss: 0.2921

Epoch 20/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.2498

Epoch 21/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.2162

Epoch 22/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.1894

Epoch 23/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.1678

Epoch 24/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.1505

Epoch 25/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.1365

Epoch 26/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.1251

Epoch 27/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.1158

Epoch 28/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.1081

Epoch 29/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.1017

Epoch 30/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0963

Epoch 31/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0917

Epoch 32/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0878

Epoch 33/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0844

Epoch 34/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0814

Epoch 35/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0788

Epoch 36/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0764

Epoch 37/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0742

Epoch 38/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 33ms/step - loss: 0.0722

Epoch 39/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0703

Epoch 40/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0686

Epoch 41/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0669

Epoch 42/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0654

Epoch 43/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0639

Epoch 44/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0624

Epoch 45/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0611

Epoch 46/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0597

Epoch 47/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0585

Epoch 48/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0572

Epoch 49/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0560

Epoch 50/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 34ms/step - loss: 0.0548

Epoch 51/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0537

Epoch 52/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0526

Epoch 53/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0515

Epoch 54/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0504

Epoch 55/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0494

Epoch 56/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0483

Epoch 57/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0473

Epoch 58/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 0.0464

Epoch 59/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0454

Epoch 60/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0445

Epoch 61/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0436

Epoch 62/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 39ms/step - loss: 0.0427

Epoch 63/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0418

Epoch 64/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0409

Epoch 65/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0401

Epoch 66/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0393

Epoch 67/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0385

Epoch 68/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0377

Epoch 69/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0369

Epoch 70/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0361

Epoch 71/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0354

Epoch 72/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0347

Epoch 73/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 49ms/step - loss: 0.0339

Epoch 74/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0333

Epoch 75/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0326

Epoch 76/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0319

Epoch 77/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0312

Epoch 78/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0306

Epoch 79/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0300

Epoch 80/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0294

Epoch 81/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0288

Epoch 82/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0282

Epoch 83/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0276

Epoch 84/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0270

Epoch 85/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0265

Epoch 86/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 17ms/step - loss: 0.0259

Epoch 87/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 0.0254

Epoch 88/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0249

Epoch 89/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 17ms/step - loss: 0.0244

Epoch 90/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0239

Epoch 91/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0234

Epoch 92/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0229

Epoch 93/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0224

Epoch 94/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0220

Epoch 95/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0215

Epoch 96/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0211

Epoch 97/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 0.0206

Epoch 98/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0202

Epoch 99/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0198

Epoch 100/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0194

Epoch 101/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0190

Epoch 102/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0186

Epoch 103/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0182

Epoch 104/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0178

Epoch 105/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 47ms/step - loss: 0.0175

Epoch 106/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 0.0171

Epoch 107/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0168

Epoch 108/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0164

Epoch 109/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0161

Epoch 110/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0158

Epoch 111/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0154

Epoch 112/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0151

Epoch 113/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0148

Epoch 114/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0145

Epoch 115/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0142

Epoch 116/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0139

Epoch 117/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0136

Epoch 118/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0133

Epoch 119/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0131

Epoch 120/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0128

Epoch 121/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0125

Epoch 122/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0123

Epoch 123/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0120

Epoch 124/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 0.0118

Epoch 125/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 0.0115

Epoch 126/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 0.0113

Epoch 127/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0111

Epoch 128/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0108

Epoch 129/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0106

Epoch 130/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0104

Epoch 131/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0102

Epoch 132/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0100

Epoch 133/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0098

Epoch 134/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0096

Epoch 135/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0094

Epoch 136/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0092

Epoch 137/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0090

Epoch 138/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0088

Epoch 139/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0086

Epoch 140/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 0.0085

Epoch 141/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0083

Epoch 142/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0081

Epoch 143/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0079

Epoch 144/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0078

Epoch 145/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0076

Epoch 146/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0075

Epoch 147/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0073

Epoch 148/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0072

Epoch 149/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0070

Epoch 150/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0069

Epoch 151/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0067

Epoch 152/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0066

Epoch 153/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0065

Epoch 154/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0063

Epoch 155/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0062

Epoch 156/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0061

Epoch 157/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0059

Epoch 158/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0058

Epoch 159/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0057

Epoch 160/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0056

Epoch 161/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0055

Epoch 162/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0054

Epoch 163/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0052

Epoch 164/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0051

Epoch 165/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 15ms/step - loss: 0.0050

Epoch 166/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0049

Epoch 167/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0048

Epoch 168/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0047

Epoch 169/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0046

Epoch 170/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0045

Epoch 171/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0044

Epoch 172/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0043

Epoch 173/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0043

Epoch 174/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0042

Epoch 175/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0041

Epoch 176/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0040

Epoch 177/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0039

Epoch 178/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0038

Epoch 179/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0038

Epoch 180/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0037

Epoch 181/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0036

Epoch 182/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0035

Epoch 183/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0035

Epoch 184/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0034

Epoch 185/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0033

Epoch 186/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0033

Epoch 187/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0032

Epoch 188/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 0.0031

Epoch 189/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0031

Epoch 190/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0030

Epoch 191/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0029

Epoch 192/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0029

Epoch 193/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0028

Epoch 194/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0028

Epoch 195/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0027

Epoch 196/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0026

Epoch 197/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0026

Epoch 198/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0025

Epoch 199/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0025

Epoch 200/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0024

Epoch 201/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0024

Epoch 202/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0023

Epoch 203/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0023

Epoch 204/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0022

Epoch 205/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0022

Epoch 206/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0021

Epoch 207/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0021

Epoch 208/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0021

Epoch 209/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0020

Epoch 210/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0020

Epoch 211/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0019

Epoch 212/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0019

Epoch 213/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0019

Epoch 214/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0018

Epoch 215/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0018

Epoch 216/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0017

Epoch 217/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0017

Epoch 218/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0017

Epoch 219/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0016

Epoch 220/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0016

Epoch 221/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0016

Epoch 222/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0015

Epoch 223/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0015

Epoch 224/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0015

Epoch 225/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0014

Epoch 226/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 0.0014

Epoch 227/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0014

Epoch 228/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0014

Epoch 229/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0013

Epoch 230/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 35ms/step - loss: 0.0013

Epoch 231/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0013

Epoch 232/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0013

Epoch 233/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 0.0012

Epoch 234/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0012

Epoch 235/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0012

Epoch 236/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 0.0012

Epoch 237/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0011

Epoch 238/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0011

Epoch 239/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0011

Epoch 240/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 0.0011

Epoch 241/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0010

Epoch 242/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 0.0010

Epoch 243/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 9.9661e-04

Epoch 244/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 9.7614e-04

Epoch 245/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 9.5609e-04

Epoch 246/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 9.3644e-04

Epoch 247/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 9.1721e-04

Epoch 248/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.9837e-04

Epoch 249/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.7992e-04

Epoch 250/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.6185e-04

Epoch 251/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.4414e-04

Epoch 252/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 8.2680e-04

Epoch 253/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 8.0982e-04

Epoch 254/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 7.9318e-04

Epoch 255/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 7.7689e-04

Epoch 256/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 7.6093e-04

Epoch 257/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.4530e-04

Epoch 258/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 7.2999e-04

Epoch 259/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 7.1500e-04

Epoch 260/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.0031e-04

Epoch 261/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 6.8593e-04

Epoch 262/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 6.7184e-04

Epoch 263/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.5803e-04

Epoch 264/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 6.4452e-04

Epoch 265/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 6.3128e-04

Epoch 266/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.1831e-04

Epoch 267/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 6.0561e-04

Epoch 268/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.9317e-04

Epoch 269/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.8099e-04

Epoch 270/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.6906e-04

Epoch 271/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.5737e-04

Epoch 272/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 5.4592e-04

Epoch 273/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.3471e-04

Epoch 274/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 5.2372e-04

Epoch 275/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.1297e-04

Epoch 276/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.0243e-04

Epoch 277/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.9211e-04

Epoch 278/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 4.8200e-04

Epoch 279/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.7210e-04

Epoch 280/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.6240e-04

Epoch 281/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.5290e-04

Epoch 282/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.4360e-04

Epoch 283/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 4.3449e-04

Epoch 284/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 4.2556e-04

Epoch 285/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.1682e-04

Epoch 286/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.0826e-04

Epoch 287/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.9987e-04

Epoch 288/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.9166e-04

Epoch 289/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.8362e-04

Epoch 290/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.7573e-04

Epoch 291/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.6801e-04

Epoch 292/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.6046e-04

Epoch 293/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.5305e-04

Epoch 294/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.4580e-04

Epoch 295/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 3.3869e-04

Epoch 296/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.3174e-04

Epoch 297/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.2492e-04

Epoch 298/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.1825e-04

Epoch 299/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 3.1171e-04

Epoch 300/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 3.0531e-04

Epoch 301/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.9904e-04

Epoch 302/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.9290e-04

Epoch 303/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.8688e-04

Epoch 304/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.8098e-04

Epoch 305/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.7521e-04

Epoch 306/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.6956e-04

Epoch 307/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.6403e-04

Epoch 308/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.5860e-04

Epoch 309/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.5329e-04

Epoch 310/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.4809e-04

Epoch 311/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.4299e-04

Epoch 312/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.3800e-04

Epoch 313/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.3311e-04

Epoch 314/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.2832e-04

Epoch 315/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.2364e-04

Epoch 316/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.1904e-04

Epoch 317/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.1454e-04

Epoch 318/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.1013e-04

Epoch 319/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.0582e-04

Epoch 320/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.0159e-04

Epoch 321/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.9745e-04

Epoch 322/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.9339e-04

Epoch 323/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.8942e-04

Epoch 324/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 1.8553e-04

Epoch 325/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.8172e-04

Epoch 326/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 1.7799e-04

Epoch 327/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.7433e-04

Epoch 328/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.7075e-04

Epoch 329/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.6724e-04

Epoch 330/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.6381e-04

Epoch 331/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.6044e-04

Epoch 332/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.5715e-04

Epoch 333/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 1.5392e-04

Epoch 334/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.5076e-04

Epoch 335/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.4766e-04

Epoch 336/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.4463e-04

Epoch 337/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.4166e-04

Epoch 338/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.3875e-04

Epoch 339/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.3590e-04

Epoch 340/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.3311e-04

Epoch 341/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.3037e-04

Epoch 342/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.2769e-04

Epoch 343/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.2507e-04

Epoch 344/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.2250e-04

Epoch 345/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.1999e-04

Epoch 346/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.1752e-04

Epoch 347/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.1511e-04

Epoch 348/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.1274e-04

Epoch 349/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 1.1043e-04

Epoch 350/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 1.0816e-04

Epoch 351/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.0594e-04

Epoch 352/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 1.0376e-04

Epoch 353/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.0163e-04

Epoch 354/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 9.9544e-05

Epoch 355/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 9.7498e-05

Epoch 356/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 9.5495e-05

Epoch 357/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 9.3534e-05

Epoch 358/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 9.1613e-05

Epoch 359/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 8.9730e-05

Epoch 360/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 8.7887e-05

Epoch 361/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 8.6082e-05

Epoch 362/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 8.4313e-05

Epoch 363/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 8.2582e-05

Epoch 364/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - loss: 8.0885e-05

Epoch 365/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.9224e-05

Epoch 366/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.7597e-05

Epoch 367/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.6004e-05

Epoch 368/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 7.4443e-05

Epoch 369/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.2912e-05

Epoch 370/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.1415e-05

Epoch 371/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.9948e-05

Epoch 372/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.8512e-05

Epoch 373/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 6.7105e-05

Epoch 374/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.5726e-05

Epoch 375/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.4376e-05

Epoch 376/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.3053e-05

Epoch 377/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 6.1758e-05

Epoch 378/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.0489e-05

Epoch 379/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.9246e-05

Epoch 380/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.8031e-05

Epoch 381/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.6839e-05

Epoch 382/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.5672e-05

Epoch 383/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.4529e-05

Epoch 384/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.3408e-05

Epoch 385/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.2311e-05

Epoch 386/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.1237e-05

Epoch 387/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.0185e-05

Epoch 388/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.9154e-05

Epoch 389/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.8144e-05

Epoch 390/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 4.7156e-05

Epoch 391/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 4.6188e-05

Epoch 392/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 4.5239e-05

Epoch 393/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - loss: 4.4309e-05

Epoch 394/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 4.3398e-05

Epoch 395/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 4.2507e-05

Epoch 396/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.1633e-05

Epoch 397/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.0779e-05

Epoch 398/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.9942e-05

Epoch 399/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.9120e-05

Epoch 400/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 3.8318e-05

Epoch 401/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.7531e-05

Epoch 402/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.6759e-05

Epoch 403/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 3.6003e-05

Epoch 404/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.5264e-05

Epoch 405/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.4541e-05

Epoch 406/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.3831e-05

Epoch 407/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 3.3135e-05

Epoch 408/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 3.2455e-05

Epoch 409/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 3.1789e-05

Epoch 410/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 3.1135e-05

Epoch 411/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 3.0495e-05

Epoch 412/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 2.9869e-05

Epoch 413/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.9256e-05

Epoch 414/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 2.8656e-05

Epoch 415/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.8067e-05

Epoch 416/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 2.7490e-05

Epoch 417/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.6925e-05

Epoch 418/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.6373e-05

Epoch 419/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 2.5831e-05

Epoch 420/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.5301e-05

Epoch 421/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 2.4781e-05

Epoch 422/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.4273e-05

Epoch 423/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.3773e-05

Epoch 424/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.3286e-05

Epoch 425/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.2808e-05

Epoch 426/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 2.2339e-05

Epoch 427/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.1880e-05

Epoch 428/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.1431e-05

Epoch 429/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 2.0990e-05

Epoch 430/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 2.0559e-05

Epoch 431/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 2.0137e-05

Epoch 432/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.9723e-05

Epoch 433/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.9318e-05

Epoch 434/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.8921e-05

Epoch 435/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.8533e-05

Epoch 436/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.8152e-05

Epoch 437/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.7779e-05

Epoch 438/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.7414e-05

Epoch 439/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.7056e-05

Epoch 440/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.6706e-05

Epoch 441/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.6363e-05

Epoch 442/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.6026e-05

Epoch 443/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.5697e-05

Epoch 444/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.5375e-05

Epoch 445/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.5059e-05

Epoch 446/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.4749e-05

Epoch 447/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.4447e-05

Epoch 448/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.4150e-05

Epoch 449/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.3859e-05

Epoch 450/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.3574e-05

Epoch 451/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.3296e-05

Epoch 452/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.3022e-05

Epoch 453/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.2755e-05

Epoch 454/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.2493e-05

Epoch 455/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.2237e-05

Epoch 456/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.1985e-05

Epoch 457/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.1739e-05

Epoch 458/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.1498e-05

Epoch 459/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 1.1262e-05

Epoch 460/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.1031e-05

Epoch 461/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 1.0804e-05

Epoch 462/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.0582e-05

Epoch 463/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.0365e-05

Epoch 464/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 1.0152e-05

Epoch 465/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 9.9434e-06

Epoch 466/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 9.7392e-06

Epoch 467/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 9.5390e-06

Epoch 468/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 9.3433e-06

Epoch 469/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 9.1514e-06

Epoch 470/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 8.9629e-06

Epoch 471/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 8.7788e-06

Epoch 472/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.5991e-06

Epoch 473/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 8.4220e-06

Epoch 474/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 8.2494e-06

Epoch 475/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 8.0797e-06

Epoch 476/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 7.9138e-06

Epoch 477/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 7.7513e-06

Epoch 478/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 7.5920e-06

Epoch 479/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 8ms/step - loss: 7.4360e-06

Epoch 480/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.2836e-06

Epoch 481/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 7.1340e-06

Epoch 482/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.9870e-06

Epoch 483/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 6.8438e-06

Epoch 484/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.7037e-06

Epoch 485/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.5661e-06

Epoch 486/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 6.4307e-06

Epoch 487/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 14ms/step - loss: 6.2986e-06

Epoch 488/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.1693e-06

Epoch 489/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 6.0430e-06

Epoch 490/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 5.9181e-06

Epoch 491/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 5.7970e-06

Epoch 492/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.6777e-06

Epoch 493/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.5607e-06

Epoch 494/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.4468e-06

Epoch 495/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 11ms/step - loss: 5.3349e-06

Epoch 496/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 5.2249e-06

Epoch 497/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - loss: 5.1183e-06

Epoch 498/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 5.0129e-06

Epoch 499/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 9ms/step - loss: 4.9098e-06

Epoch 500/500

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step - loss: 4.8089e-06

<keras.src.callbacks.history.History at 0x14fd18770>

Yay, training is complete!

Before moving on, let's review how our neural network learned.

In the first epochs, the loss value is large, but it keeps shrinking with each subsequent epoch. By the time training finishes, the loss is very small (about 4.8e-06 at epoch 500), which shows that the model has learned the relationship between X and Y very well.

You may have noticed that you don't need all 500 epochs, so feel free to experiment with different epoch counts. As the output above shows, the loss is already very small after epoch 50!
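To get a feel for how the epoch count affects the loss without rerunning the notebook, here is a minimal sketch in plain Python. It is not what Keras does internally: it uses simple full-batch gradient descent, starts from w = b = 0, and assumes six hypothetical training points on the line Y=3X+1 with a learning rate of 0.01. The names `train` and `mse` are made up for this illustration.

```python
# Assumed training data: six points on the line y = 3x + 1.
# The x values are hypothetical; only the relationship comes from the text.
XS = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
YS = [3.0 * x + 1.0 for x in XS]


def mse(w, b):
    """Mean squared error of the line y = w*x + b on the training data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def train(epochs, learning_rate=0.01):
    """Run full-batch gradient descent from w = b = 0 and return (w, b, loss)."""
    w, b = 0.0, 0.0
    n = len(XS)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(XS, YS)) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(XS, YS)) / n
        # Move both parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, mse(w, b)


# Compare the final loss for a few different epoch counts.
for epochs in (10, 50, 500):
    w, b, loss = train(epochs)
    print(f"epochs={epochs:3d}  loss={loss:.3e}  w={w:.4f}  b={b:.4f}")
```

Running it prints one line per epoch count, so you can see how much of the improvement happens early. The exact numbers will differ from the Keras log because the starting weights differ.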

Using the model#

You now have a model that has been trained to learn the relationship between X and Y. You can use the model.predict function to predict Y for a new value of X. For example, if X is 10, what is Y?

Take a guess before running the code below:

print(model.predict(np.array([10.0])))

1/1 ━━━━━━━━━━━━━━━━━━━━ 0s 10ms/step

[[31.006397]]

You might have guessed 31, but the model's result is slightly different. Why?

Neural networks deal in approximations and probabilities rather than certainties. From only 6 data points, the network learned a relationship that is very close to Y=3X+1, but it cannot know for sure that this is the exact rule. The result is therefore very close to 31, but not necessarily exactly 31.
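For comparison, it can help to see what an exact fit would give. The sketch below is not how the network was trained: it swaps in closed-form ordinary least squares, and it assumes six hypothetical points that lie exactly on Y=3X+1. The helper name `least_squares_line` is made up for this illustration.

```python
# Assumed training data: six points that lie exactly on y = 3x + 1.
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x + 1.0 for x in xs]


def least_squares_line(xs, ys):
    """Closed-form ordinary least squares fit of y = slope*x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = least_squares_line(xs, ys)
exact = slope * 10.0 + intercept
reported = 31.006397  # value printed by model.predict in the text

print(f"least squares: y = {slope:.4f}x + {intercept:.4f}")
print(f"prediction at x = 10: {exact:.4f}")
print(f"gap to the network's prediction: {reported - exact:.6f}")
```

Under these assumptions the closed-form fit recovers the line itself, while the iteratively trained network ends up with a weight and bias that are close to, but not exactly, 3 and 1. That small difference is what shows up in the prediction.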

The more you work with neural networks, the more often you will see this pattern. You will almost always be dealing with probabilities rather than certainties, and you will write a bit of code to work out the result from those probabilities, especially for classification.
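As a rough idea of what that "bit of code" can look like for classification, the sketch below picks the most likely label from a list of probabilities. The probabilities are invented for illustration and do not come from any model in this notebook. The labels reuse the activity names from the fitness example, and `most_likely` is a hypothetical helper.

```python
# Hypothetical class labels and model outputs, for illustration only.
labels = ["WALKING", "RUNNING", "CYCLING"]
probabilities = [0.05, 0.85, 0.10]


def most_likely(labels, probabilities):
    """Return the (label, probability) pair with the highest probability."""
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return labels[best_index], probabilities[best_index]


label, confidence = most_likely(labels, probabilities)
print(f"predicted: {label} ({confidence:.0%})")
```

The model only reports how likely each class is. Turning that into a single answer, and deciding how confident is confident enough, is left to your code.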

Congratulations! 🎉#

Believe it or not, you have now covered most of the ML concepts you will use in much more complex scenarios. You learned how to train a neural network to find the relationship between two sets of numbers. You defined a set of layers (only one in this tutorial) containing neurons (also only one in this case), and then compiled the model with a loss function and an optimizer.

Together, the neural network, the loss function, and the optimizer handle the process of guessing the relationship between the numbers, measuring how good the guess is, and then producing new parameters for the next guess. That, in simple terms, is how machine learning works.